Tuesday, June 28, 2011

Saturday, June 25, 2011

Math Forum - Ask Dr. Math

inf means "infimum," or "greatest lower bound." This is slightly different from minimum in that the greatest lower bound is defined as: x is the infimum of the set S [in symbols, x = inf (S)] iff: a) x is less than or equal to all elements of S b) there is no other number larger than x which is less than or equal to all elements of S. Basically, (a) means that x is a lower bound of S, and (b) means that x is greater than all other lower bounds of S.
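As a small worked example (not part of the quoted answer; the set is chosen purely for illustration), take the open interval S = (0, 1). It shows how a set can have an infimum without having a minimum:

```latex
\[
S = (0,1) = \{\, s \in \mathbb{R} : 0 < s < 1 \,\}, \qquad \inf(S) = 0 .
\]
% (a) 0 is a lower bound: 0 \le s for every s \in S.
% (b) No larger number is a lower bound: if y > 0, then
%     s = \min(y, 1)/2 lies in S and satisfies s < y.
\[
0 \notin S \;\Longrightarrow\; S \text{ has an infimum but no minimum.}
\]
```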

Friday, June 24, 2011

Thursday, June 23, 2011

Data mining Blog, Preprocessing, Normalization

Before we normalize the data set, we need to check for outliers.

There are two possible ways to handle outliers in the data:

1. Ignore them, i.e. remove them from the data set, or

2. Reassign the value to the appropriate upper or lower threshold.
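The two options above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the thresholds come from the common 1.5 × IQR rule, which the original post does not specify, and the helper names (`iqr_bounds`, `remove_outliers`, `clip_outliers`, `min_max_normalize`) are hypothetical.

```python
from statistics import quantiles


def iqr_bounds(values, k=1.5):
    """Return (lower, upper) thresholds from the k * IQR rule (an assumed rule)."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    return q1 - k * spread, q3 + k * spread


def remove_outliers(values, k=1.5):
    """Option 1: drop every value outside the thresholds."""
    lower, upper = iqr_bounds(values, k)
    return [v for v in values if lower <= v <= upper]


def clip_outliers(values, k=1.5):
    """Option 2: reassign outliers to the nearest threshold."""
    lower, upper = iqr_bounds(values, k)
    return [min(max(v, lower), upper) for v in values]


def min_max_normalize(values):
    """Rescale values linearly to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]


data = [10, 12, 11, 13, 12, 95]       # 95 is an obvious outlier
removed = remove_outliers(data)       # option 1
clipped = clip_outliers(data)         # option 2
normalized = min_max_normalize(clipped)
```

Without the outlier step, min-max scaling of `data` would squeeze the five ordinary values into a narrow band near 0, which is why the check comes first.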

Thursday, June 16, 2011

Thursday, June 9, 2011

Thursday, June 2, 2011

Monday, May 9, 2011

Friday, May 6, 2011

Monday, May 2, 2011

Stat 375 – Inference in Graphical Models


Graphical models are a unifying framework for describing the statistical relationships between large collections of random variables. Given a graphical model, the most fundamental (and yet highly non-trivial) task is to compute the marginal distribution of one or a few such variables. This task is usually referred to as 'inference'. The focus of this course is on sparse graphical structures, low-complexity inference algorithms, and their analysis. In particular we will treat the following methods: variational inference; message passing algorithms; belief propagation; generalized belief propagation; survey propagation; learning. Applications/examples will include: Gaussian models with sparse inverse covariance; hidden Markov models (Viterbi and BCJR algorithms, Kalman filter); computer vision (segmentation, tracking, etc.); constraint satisfaction problems; machine learning (clustering, classification); communications.

Sunday, May 1, 2011

Thursday, April 28, 2011

Wednesday, April 27, 2011

Classifier Showdown « Synaptic

Saturday, April 23, 2011

Friday, April 22, 2011

Tuesday, April 19, 2011

math - Mathematics for AI/Machine learning ? - Stack Overflow

- Logic - An Investigation of the Laws of Thought (Boole) and Set Theory and Logic (Stoll)

- Computation - Introduction to the Theory of Computation (Sipser)

- Probability -

algorithm - Help Understanding Cross Validation and Decision Trees - Stack Overflow

Monday, April 18, 2011

Notes on Path Finding problem

astar

c.) matrix[u][v] = 1 denotes an edge between u and v, and matrix[u][v] = 0 denotes no edge between u and v. Then (matrix)^3 (just simple matrix exponentiation) is 'magically' the path matrix for paths of exactly length 3: entry [u][v] counts the walks of length 3 from u to v.

- An efficient implementation of Dijkstra's algorithm takes O(E log V) time for a graph with E edges and V vertices.

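- Hosam Aly's "flood fill" is a breadth first search, which is O(V + E), i.e. O(V) on sparse graphs like the OP's, where the number of edges is proportional to the number of vertices. This can be thought of as a special case of Dijkstra's algorithm in which no vertex can have its distance estimate revised.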

- The Floyd-Warshall algorithm takes O(V^3) time, is very easy to code, and is still the fastest for dense graphs (those graphs where vertices are typically connected to many other vertices). But it's not the right choice for the OP's task, which involves very sparse graphs.
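To make the breadth-first-search remark concrete, here is a minimal sketch of BFS on an unweighted graph. The function name `bfs_distances` and the small example graph are assumptions for illustration, not code from the quoted answers.

```python
from collections import deque


def bfs_distances(adjacency, source):
    """Return the fewest-edges distance from source to every reachable vertex."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adjacency[u]:
            # A vertex is settled the first time it is seen, so its
            # distance estimate is never revised (unlike general Dijkstra).
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


# Hypothetical undirected graph given as adjacency lists.
graph = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d"],
    "d": ["b", "c", "e"],
    "e": ["d"],
}
distances = bfs_distances(graph, "a")
```

Each vertex enters the queue once and each adjacency list is scanned once, which is where the linear running time comes from.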

Raimund Seidel gives a simple method using matrix multiplication to compute the all-pairs distance matrix on an unweighted, undirected graph (which is exactly what you want) in the first section of his paper On the All-Pairs-Shortest-Path Problem in Unweighted Undirected Graphs [pdf].
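The adjacency-matrix remark in these notes can be checked on a tiny graph. The sketch below is plain repeated matrix multiplication, not Seidel's algorithm; the helper names `mat_mul` and `mat_pow` and the example graph are assumptions. Note that the entries count walks, so a vertex may be visited more than once.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(m, exponent):
    """Raise a square matrix to a non-negative integer power."""
    n = len(m)
    result = [[int(i == j) for j in range(n)] for i in range(n)]  # identity
    for _ in range(exponent):
        result = mat_mul(result, m)
    return result


# Path graph 0 - 1 - 2 - 3 as an adjacency matrix.
adjacency = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
walks3 = mat_pow(adjacency, 3)
# walks3[u][v] is the number of length-3 walks from u to v.
```

Here `walks3[0][3]` is 1 (the walk 0-1-2-3) and `walks3[0][1]` is 2 (0-1-0-1 and 0-1-2-1).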

(This is not exactly the problem that I have, but it's isomorphic, and I think that this explanation will be easiest for others to understand.)

Suppose that I have a set of points in an n-dimensional space. Using 3 dimensions for example:

A : [1,2,3]

B : [4,5,6]

C : [7,8,9]

I also have a set of vectors that describe possible movements in this space:

V1 : [+1,0,-1]

V2 : [+2,0,0]

Now, given a point dest, I need to find a starting point p and a set of vectors moves that will bring me to dest in the most efficient manner. Efficiency is defined as "fewest number of moves", not necessarily "least linear distance": it's permissible to select a p that's further from dest than other candidates if the move set is such that you can get there in fewer moves. The vectors in moves must be a strict subset of the available vectors; you can't use the same vector more than once unless it appears more than once in the input set.

My input contains ~100 starting points and maybe ~10 vectors, and my number of dimensions is ~20. The starting points and available vectors will be fixed for the lifetime of the app, but I'll be finding paths for many, many different dest points. I want to optimize for speed, not memory. It's acceptable for the algorithm to fail (to find no possible paths to dest).

Update w/ Accepted Solution

I adopted a solution very similar to the one marked below as "accepted". I iterate over all points and vectors and build a list of all reachable points with the routes to reach them. I convert this list into a hash of <dest, p+vectors>, selecting the shortest set of vectors for each destination point. (There is also a little bit of optimization for hash size, which isn't relevant here.) Subsequent dest lookups happen in constant time.
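A minimal sketch of that precomputation, using the example points and vectors from the question. This is an illustration rather than the poster's actual code: the function name `build_route_table` and the dictionary layout are assumptions, and the hash-size optimization mentioned above is omitted.

```python
from itertools import combinations


def build_route_table(points, vectors):
    """Map each reachable destination to (start name, vector names) with the fewest moves."""
    table = {}
    names = list(vectors)
    # Enumerate subsets by increasing size, so the first route stored
    # for a destination is one with the fewest moves.
    for size in range(len(names) + 1):
        for subset in combinations(names, size):
            for start_name, start in points.items():
                dest = tuple(start)
                for name in subset:  # each vector is used at most once
                    dest = tuple(d + m for d, m in zip(dest, vectors[name]))
                table.setdefault(dest, (start_name, subset))
    return table


points = {"A": (1, 2, 3), "B": (4, 5, 6), "C": (7, 8, 9)}
vectors = {"V1": (1, 0, -1), "V2": (2, 0, 0)}
routes = build_route_table(points, vectors)

# Lookups are then plain dictionary accesses; None means "no path".
route = routes.get((4, 2, 2))
```

With about 100 starting points and 10 vectors the table has at most 100 × 2^10 entries, so building it once up front is cheap compared with answering many dest queries.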

Sunday, April 17, 2011

Regularization for high dimensional learning Course

www.disi.unige.it/dottorato/corsi/RegMet2011/

Thursday, April 7, 2011

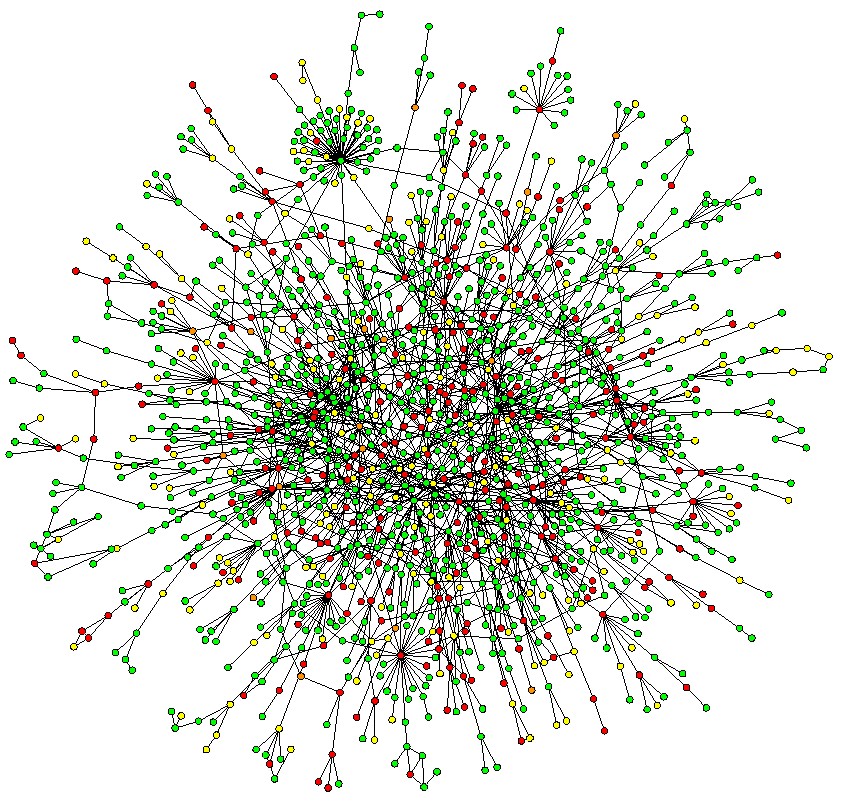

Welcome to Social Web Mining Workshop, co-located with IJCAI 2011

International Workshop on Social Web Mining

Co-located with IJCAI, 18 July 2011, Barcelona, Spain

Sponsored by PASCAL 2

News: the submission deadline has been extended to 20 April 2011.

Introduction:

There is increasing interest in social web mining, as we can see from the ACM workshop on Social Web Search and Analysis. Only recently has great progress been made in mining social networks for various applications, e.g., making personalized recommendations. This workshop focuses on the study of diverse aspects of social networks and their applications in domains including mobile recommendations, service providers, electronic commerce, etc.

Social networks have actually played an important role in different domains for about a decade, particularly in recommender systems. In general, traditional collaborative filtering approaches can be considered as making personalized recommendations based on implicit social interaction, where social connections are defined by some similarity metrics on common rated items, e.g., movies for the Netflix Prize.

With the recent development of Web 2.0, a number of globally deployed applications for explicit social interactions have emerged, such as Facebook, Flickr, LinkedIn, Twitter, etc. These applications have been exploited by academic institutions and industry to build modern recommender systems based on social networks, e.g., Microsoft's Project Emporia, which recommends tweets to users based on their behavior. In recent years, rapid progress has been made in the study of social networks for diverse applications. For instance, researchers have proposed various tensor factorization techniques to analyze user-item-tag data in Flickr for group recommendations. Also, researchers study Facebook to infer users' preferences.

However, many challenges remain in mining the social web and applying it in recommender systems. Some are:

- What is the topology of social networks for some specific application like LinkedIn?

- How could one build optimal models for social networks such as Facebook?

- How can one handle the privacy issues caused by utilizing social interactions for making recommendations?

- How could one model a user's preferences based on his/her social interactions?

Topics:

The workshop will seek submissions that cover social networks, data mining, machine learning, and recommender systems. The workshop is especially interested in papers that focus on applied domains such as web mining, mobile recommender systems, social recommender systems, and privacy in social web mining. The following is a sample, but not complete, list of topics in which we encourage submissions:

- Active learning

- Matchmaking

- Mobile recommender systems

- Multi-task learning

- Learning graph matching

- Learning to rank

- Online and contextual advertising

- Online learning

- Privacy in social networks

- Preference learning or elicitation

- Social network mining

- Social summarization

- Tag recommendation

- Transfer learning

- Web graph analysis

Louhi 2011

Wednesday, April 6, 2011

2011 IEEE GRSS Data Fusion Contest

WorldView-2 multi-spectral multi-angular acquisitions and participating in the Contest. The deadline for the paper submission is May 31, 2011. Final results will be announced in Vancouver (Canada) at the 2011 IEEE International Geoscience and Remote Sensing Symposium.

Check the IGARSS 2011 abstract at http://slidesha.re/gLagLW

About the IEEE GRSS Data Fusion Contest:

The Data Fusion Contest has been organized by the Data Fusion Technical Committee of the Geoscience and Remote Sensing Society of the Institute of Electrical and Electronics Engineers and proposed annually since 2006. It is a contest open not only to IEEE members, but to everyone.

This year the Data Fusion Contest aims at exploiting multi-angular acquisitions over the same target area.

Five WorldView-2 multi-sequence images have been provided by DigitalGlobe. This unique data set is composed of five Ortho Ready Standard Level-2 WorldView-2 multi-angular acquisitions, including both 16 bit panchromatic and multi-spectral 8-band images. The imagery was collected over Rio de Janeiro (Brazil) in January 2010 within a three minute time frame. The multi-angular sequence contains the downtown area of Rio, including a number of large buildings, commercial and industrial structures, the airport and a mixture of community parks and private housing.

Since there is a large variety of possible applications, each participant can decide the research topic to work on. Each participant is required to submit a full paper in English of no more than 4 pages including illustrations and references by May 31, 2011. Final results will be announced in Vancouver (Canada) at the 2011 IEEE International Geoscience and Remote Sensing Symposium.

2011 DigitalGlobe - IEEE GRSS Data Fusion Contest


Monday, April 4, 2011

Greg Mankiw's Blog: Advice for Grad Students

- Don Davis gives some guidance about finding research topics.

- John Cochrane tells grad students how to write a paper.

- Michael Kremer provides a checklist to make sure your paper is as good as it can be.

- David Romer gives you the rules to follow to finish your PhD.

- David Laibson offers some advice about how to navigate the job market for new PhD economists.

- John Cawley covers the same ground as Laibson but in more detail.

- Kwan Choi offers advice about how to publish in top journals.

- Dan Hamermesh offers advice on, well, just about everything.

- Assar Lindbeck tells you how, after getting that first academic post, to win the Nobel prize.

Saturday, April 2, 2011

Friday, April 1, 2011

Books for Reinforcement Learning:


Thursday, March 31, 2011

Machine Learning, etc: Graphical Models class notes

- Jeff Bilmes' Graphical Models course, gives a detailed introduction to Lauritzen's results and a proof of the Hammersley-Clifford results (look in the "old scribes" section for notes)

- Kevin Murphy's Graphical Models course, good description of I-maps

- Sam Roweis' Graphical Models course, good introduction to exponential families

- Martin Wainwright's Graphical Models class, exponential families, variational derivation of inference

- Michael Jordan's Graphical Models course, his book seems to be the most popular for this type of class

- Lauritzen's Graphical Models and Inference class, also his Statistical Inference class, useful facts on exponential families, information on log-linear models, decomposability

- Doina Precup's class, some material on structure learning

Wednesday, March 30, 2011

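MapReduce and Natural Language Processing

Permalink: http://www.52nlp.cn/mapreduce与自然语言处理

I have not been working with MapReduce for long and am still at beginner level, so I am not really qualified to talk about "MapReduce and natural language processing" here. However, over the past two days I happened to read the IBM developerWorks article 《用 MapReduce 解决与云计算相关的 Big Data 问题》 ("Using MapReduce to Solve Big Data Problems Related to Cloud Computing"), and I think it has two major merits. First, intentionally or not, it gives readers reference material on MapReduce that is both valuable and well organized. Second, although the article does not discuss natural language processing directly, its closing "next steps" section lists NLP-related references for readers interested in MapReduce for text processing, and these references are quite valuable. So, on the subject of "MapReduce or parallel algorithms and natural language processing", I will try to get the discussion started here, drawing on that article and my own limited experience. It is, of course, only a starting point.

MapReduce is a parallel programming paradigm defined by Google. It was proposed in 2004 by two Google researchers, Jeffrey Dean and Sanjay Ghemawat, both of whom are now Google Fellows. Their original paper is therefore required reading for anyone learning MapReduce:

'The article "MapReduce: Simplified Data Processing on Large Clusters", published by Google engineers, clearly explains how MapReduce works. As a result of this article, many open-source MapReduce implementations have appeared from 2004 to the present.'

Google Labs also hosts the abstract and HTML slides for this paper: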

MapReduce is a programming model and an associated implementation for processing and generating large data sets. Users specify a map function that processes a key/value pair to generate a set of intermediate key/value pairs, and a reduce function that merges all intermediate values associated with the same intermediate key. Many real world tasks are expressible in this model, as shown in the paper.
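The map and reduce roles described in the abstract can be illustrated with a single-process word count in Python. This is only a toy sketch of the programming model, not Google's or Hadoop's implementation; `map_fn`, `reduce_fn`, and `run_mapreduce` are hypothetical names, and the grouping step stands in for the distributed shuffle.

```python
from collections import defaultdict


def map_fn(doc_id, text):
    """Map: emit an intermediate (word, 1) pair for every word in a document."""
    for word in text.lower().split():
        yield word, 1


def reduce_fn(word, counts):
    """Reduce: merge all intermediate values that share the same key."""
    return word, sum(counts)


def run_mapreduce(inputs, mapper, reducer):
    """Run map, group intermediate values by key, then reduce (single process)."""
    groups = defaultdict(list)
    for key, value in inputs:
        for inter_key, inter_value in mapper(key, value):
            groups[inter_key].append(inter_value)
    return dict(reducer(k, vs) for k, vs in groups.items())


documents = [
    ("d1", "the quick brown fox"),
    ("d2", "the lazy dog and the fox"),
]
word_counts = run_mapreduce(documents, map_fn, reduce_fn)
```

Because each `map_fn` call looks at one document and each `reduce_fn` call looks at one key, a real framework is free to run them in parallel on different machines.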

The inventors of MapReduce, Jeffrey Dean and Sanjay Ghemawat, revised the paper in 2008 and published it in Communications of the ACM; interested readers can find it on the ACM Portal: MapReduce: simplified data processing on large clusters.

In addition, Google Code University has a fairly formal MapReduce tutorial, Introduction to Parallel Programming and MapReduce. It is somewhat theoretical, but well worth consulting.

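As for open-source implementations of MapReduce, the best known is Hadoop. Hadoop was the earliest MapReduce implementation to appear after Google's own MapReduce system; it is written in Java and grew out of the open-source Nutch project started by Doug Cutting and Mike Cafarella. Readers interested in learning Hadoop can consult the official Hadoop documentation and also the book Hadoop: The Definitive Guide: MapReduce for the Cloud. Several sources I have seen recommend this book, and it also appears in the references of the IBM article; it does seem to be very good. An electronic version can be found via Google, so readers can help themselves.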

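Next comes "MapReduce and natural language processing" itself. The IBM article lists the following references on natural language processing:

· Natural Language Processing with Python introduces natural language processing in a very accessible way.

· There is also an article on NLP.

· The PDF document how to do large-scale NLP with NLTK and Dumbo.

· In "Charming Python: Get started with the Natural Language Toolkit", David Mertz explains how to use Python for computational linguistics.

I would additionally recommend one book: Data-Intensive Text Processing with MapReduce. Its author, Jimmy Lin, is an associate professor at the University of Maryland and has published a number of NLP papers that combine MapReduce with Hadoop. Readers who have no papers yet should hurry: "parallel algorithms and natural language processing" is a good angle (just kidding!). Jimmy Lin has made an electronic version of the book available on this page.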

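Finally, here are the other learning references listed by IBM. They are very valuable, and I am also keeping them here as a backup for myself:

· The developerWorks article "Using MapReduce and load balancing on the cloud" provides more information about using MapReduce in the cloud and discusses load balancing.

· The tutorial how to install a distribution for Hadoop on a single Linux node discusses how to install a Hadoop distribution on a single Linux node.

· "A Comparison of Approaches to Large-Scale Data Analysis" compares in detail the basic control flow of MapReduce and parallel SQL DBMSs.

· The "Distributed data processing with Hadoop" series on developerWorks helps you start developing applications, from single-node support to multi-node support.

· Learn more about the topics in this article: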

o Erlang overview

o MapReduce and parallel programming

o Amazon Elastic MapReduce

o Hive and Amazon Elastic MapReduce

o Writing parallel applications (for thread monkeys)

o 10 minutes to parallel MapReduce in Python

o Implementing MapReduce in multiprocessing

o Disco, an alternative to Hadoop

o Hadoop: The Definitive Guide: MapReduce for the Cloud

o Business intelligence: Crunch data with Hadoop (MapReduce)

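· In the developerWorks cloud developer resources, find the knowledge and experience of application and service developers building cloud deployment projects, and share your own experience.

Note: this is an original article; if you repost it, please credit the source, "我爱自然语言处理" (I Love Natural Language Processing): http://www.52nlp.cn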


Dumbo

Documentation

Sunday, March 27, 2011

clustering - Which machine learning library to use - Stack Overflow

Some Notes of SVM from S-O-F

As mokus explained, practical support vector machines use a non-linear kernel function to map data into a feature space where they are linearly separable:

Lots of different functions are used for various kinds of data. Note that an extra dimension is added by the transformation.

(Illustration from Chris Thornton, U. Sussex.)

The 2nd answer: YouTube video that illustrates an example of linearly inseparable points that become separable by a plane when mapped to a higher dimension
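The idea that points become linearly separable after gaining an extra dimension can be sketched with an explicit feature map. This is an illustration under stated assumptions: the map (x, y) → (x, y, x² + y²), the function name `lift`, and the sample points are all chosen for the example, and a real SVM would normally use a kernel function to work in the feature space implicitly instead of computing the mapped points.

```python
def lift(point):
    """Map a 2-D point to 3-D by adding its squared distance from the origin."""
    x, y = point
    return (x, y, x * x + y * y)


# Two classes that no straight line in the plane can separate:
# points near the origin versus points on a ring around them.
inner = [(0.5, 0.0), (0.0, -0.5), (-0.3, 0.4)]
outer = [(2.0, 0.0), (0.0, 2.0), (-1.5, -1.5)]

# After lifting, the horizontal plane z = 1 separates the classes.
threshold = 1.0
inner_below = [lift(p)[2] < threshold for p in inner]
outer_above = [lift(p)[2] > threshold for p in outer]
```

Every inner point ends up below the plane and every outer point above it, which is the kind of picture the illustration and the video show.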

It is the equation of a (hyper)plane using a point and normal vector.

Think of the plane as the set of points P such that the vector passing from P0 to P is perpendicular to the normal.
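Written out, the point-and-normal description looks as follows. The symbols n for the normal vector and b for the offset are assumed notation; SVM texts commonly write the normal as w.

```latex
\[
\mathbf{n} \cdot (P - P_0) = 0
\quad\Longleftrightarrow\quad
\mathbf{n} \cdot P + b = 0,
\qquad b = -\,\mathbf{n} \cdot P_0 .
\]
```

The second form is the one that appears as the decision boundary of a linear classifier: points with n · P + b > 0 lie on one side of the (hyper)plane and points with n · P + b < 0 on the other.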

Check out these pages for explanation:

http://mathworld.wolfram.com/Plane.html

http://en.wikipedia.org/wiki/Plane_%28geometry%29#Definition_with_a_point_and_a_normal_vector